The OECD AI Incidents Monitor: an evidence base for effective AI policy

AI incidents are increasing rapidly worldwide

While AI provides tremendous benefits, some uses of AI produce dangerous results that can harm individuals, businesses, and societies. These negative outcomes, captured under the umbrella term “AI incidents”, are diverse in nature and happen across sectors and industries.

To give a few examples, some algorithms incorporate biases that discriminate against people on the basis of their gender, race or socioeconomic status. Others manipulate individuals by influencing what they believe or how they vote. On a different level but just as critical, some skilled jobs are entrusted to AI, increasing unemployment in some sectors and causing harm to individuals and professions.

At this stage, these issues are known but reported ad hoc, without a consistent method. Even with the little data that exists, we can see that incident reports are increasing rapidly. The most visible incidents are those reported in the media, and they are only the tip of the iceberg.

AI actors need to identify and document these incidents in ways that do not hinder AI’s positive outcomes. Failure to address these risks responsibly and quickly will exacerbate the lack of trust in our societies and could push our democracies to the breaking point. Importantly, data about past incidents can help us to prevent similar incidents from happening in the future.

What is an “AI incident”?

Employment risks, bias, discrimination and social disruption are obvious harms, but what do they have in common? And what about hazards: less obvious events, or even precursor events, that could lead to harm? If experts worldwide are going to track and monitor incidents, the first thing to do is agree on what an AI incident is.

This week, the OECD published a stocktaking paper as a first step towards a common understanding of AI incidents. The OECD.AI Expert Group on AI Incidents brings together experts from policy, academia, the technical community and important initiatives like the Responsible AI Collaborative. It is currently developing a shared terminology that will become the foundation for reporting incidents in a compatible way across jurisdictions. From there, governments and other actors can establish an international framework for reporting incidents and hazards.

AIM is the first step to producing evidence and foresight on AI incidents for sound policymaking and international cooperation

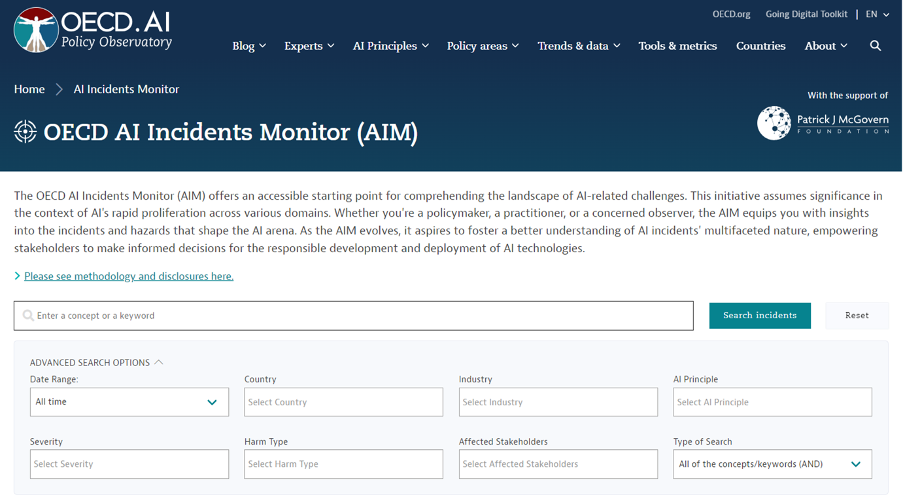

The OECD.AI Observatory has released a beta version of the AI Incidents Monitor (AIM). Developing the monitor will fulfil two crucial needs.

First, it will provide real-time evidence to support policy and regulatory decisions about AI, especially regarding the real risks AI poses and the types of AI systems that cause them.

Second, it will inform work on a common incident reporting framework. While incident reporting may be voluntary in some jurisdictions and mandatory in others, there still needs to be a central repository for all incidents and a shared methodology for reporting them.

AIM uses a global media monitoring platform to scan 150,000 news sources worldwide in real time, collecting over one million news stories per day from which it extracts information about AI incidents.

AIM: an AI system to detect and categorise incidents from the news

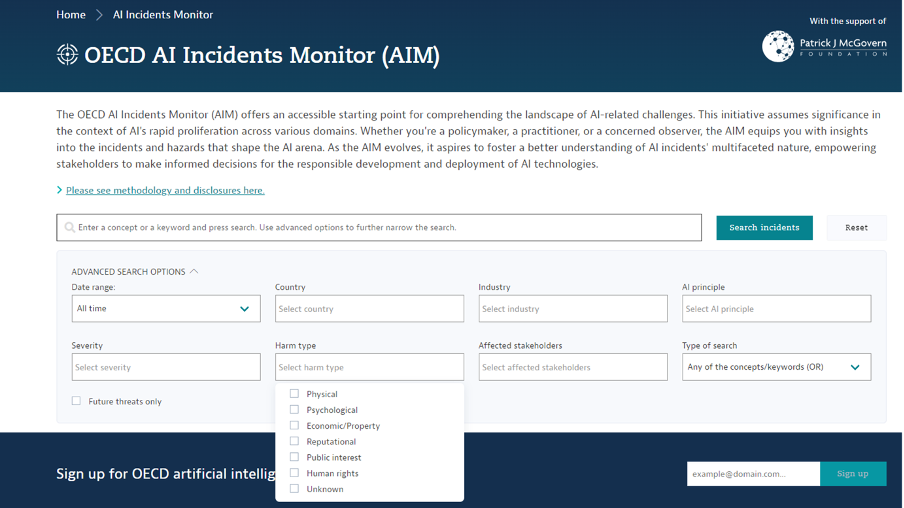

One of the first steps was to build an AI system that automates the difficult task of detecting AI incidents in real-time news articles. The second step was to categorise these incidents according to different criteria, such as sector, country, type of stakeholders affected, severity and type of harm, as seen in the filters below.

The filter criteria and subcategories mirror work in the OECD Framework for Classifying AI Systems, ensuring that AIM is consistent with previous OECD projects and frameworks. Filters such as Severity and Harm type are as consistent as possible with the findings from the stocktaking report.
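To make the idea of filtering by criteria concrete, here is a minimal sketch of how categorised incidents could be filtered on several criteria at once. It assumes a hypothetical `Incident` record and a hypothetical `filter_incidents` helper; the field names and category labels are illustrative placeholders, not AIM's actual data schema, taxonomy or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical incident record. Field names and labels are illustrative
# assumptions, not AIM's real schema or category taxonomy.
@dataclass
class Incident:
    title: str
    sector: str
    country: str
    severity: str
    harm_types: set[str] = field(default_factory=set)
    stakeholders: set[str] = field(default_factory=set)


def _matches(incident: Incident, name: str, wanted: str) -> bool:
    """Scalar fields must equal the wanted value; set fields must contain it."""
    value = getattr(incident, name)
    return wanted in value if isinstance(value, set) else value == wanted


def filter_incidents(incidents: list[Incident], **criteria: str) -> list[Incident]:
    """Keep only incidents that satisfy every requested criterion (logical AND)."""
    return [
        inc for inc in incidents
        if all(_matches(inc, name, wanted) for name, wanted in criteria.items())
    ]


# Made-up sample records for illustration only.
SAMPLE = [
    Incident("Biased CV screening tool", "Employment", "US", "serious",
             {"Economic"}, {"Workers"}),
    Incident("Misleading synthetic election video", "Media", "FR", "moderate",
             {"Public interest"}, {"General public"}),
    Incident("Automated loan denials", "Finance", "US", "serious",
             {"Economic", "Human rights"}, {"Consumers"}),
]

if __name__ == "__main__":
    for inc in filter_incidents(SAMPLE, country="US", harm_types="Economic"):
        print(inc.title)
```

Combining criteria with a logical AND mirrors how stacking several filters in a search interface typically narrows the result set; calling `filter_incidents(SAMPLE, country="US", harm_types="Economic")` returns the CV screening and loan denial records.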

Here is an example of how the categories of the Harm type criterion are displayed:

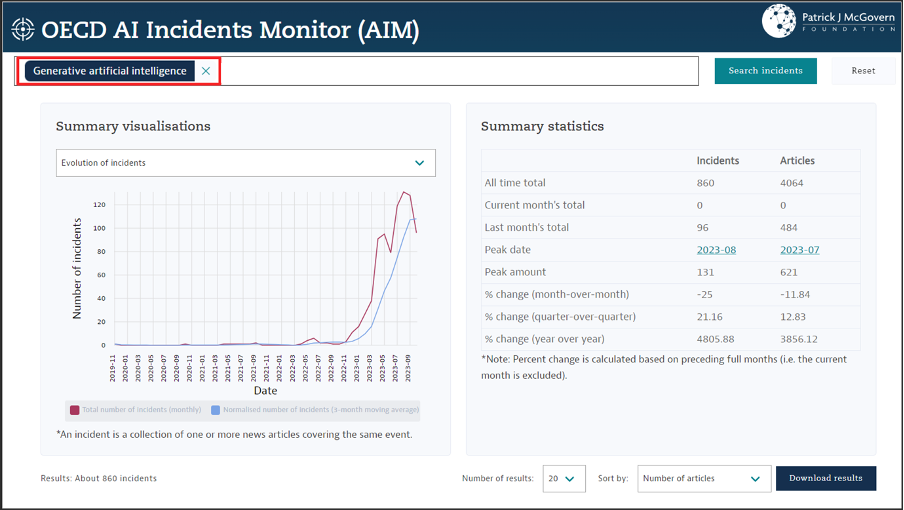

Example results for Generative AI

A search for the concept “Generative AI” shows, in AIM’s graphical interface, how the number of reported incidents related to this concept has evolved over time. Unsurprisingly, these incidents have grown rapidly since November 2022.

The monitor also provides summary statistics for each search term: the number of incidents and articles, their peaks and their percentage growth over time.
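As a rough illustration of what such summary statistics involve, the sketch below computes totals, peak periods and a growth percentage from per-period counts. The counts are made-up toy numbers, not AIM data, and the `summarise` function is hypothetical. In particular, defining growth as the percentage change from the first to the last period is an assumption; AIM may calculate it differently.

```python
from __future__ import annotations

# Toy per-period counts for one search term: (period, incidents, articles).
# These numbers are invented for illustration and are NOT AIM data.
MONTHLY = [
    ("M1", 4, 12),
    ("M2", 6, 20),
    ("M3", 15, 61),
    ("M4", 22, 95),
]


def summarise(monthly: list[tuple[str, int, int]]) -> dict:
    """Totals, peak periods and first-to-last growth (an assumed definition)."""
    total_incidents = sum(incidents for _, incidents, _ in monthly)
    total_articles = sum(articles for _, _, articles in monthly)

    # Peak = the period with the highest count (first one wins on ties).
    peak_incidents = max(monthly, key=lambda row: row[1])[0]
    peak_articles = max(monthly, key=lambda row: row[2])[0]

    # Percentage change between the first and last period; undefined (None)
    # when the first period has no incidents.
    first, last = monthly[0][1], monthly[-1][1]
    growth_pct = (last - first) / first * 100 if first else None

    return {
        "incidents": total_incidents,
        "articles": total_articles,
        "peak_incidents_period": peak_incidents,
        "peak_articles_period": peak_articles,
        "growth_pct": growth_pct,
    }


if __name__ == "__main__":
    print(summarise(MONTHLY))
```

With these toy numbers, incidents rise from 4 to 22 between the first and last period, a growth of (22 − 4) / 4 × 100 = 450%.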

Public policymakers face significant challenges in tackling AI-related risks regardless of the approach to regulating AI. They need a solid evidence base to guide their work as they assess situations and formulate effective AI policies and standards.

Much like climate change, AI is global: no single country or economic actor can tackle AI-related issues alone, so everyone must contribute to resolving them. Effective regulations, standards and safeguards must therefore be enacted locally yet remain globally interoperable, which requires coordinated international action.

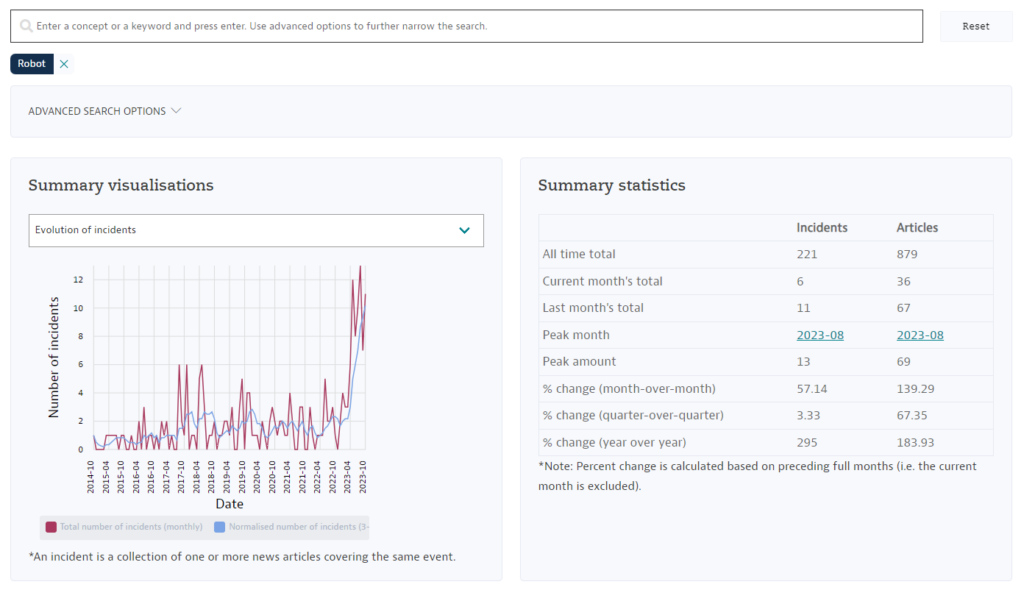

A future filter

The monitor also lets users look at predictions of future threats by ticking the “future threats only” checkbox. For example, users can search for future threats related to robots and see only news that looks ahead.

AIM to evolve into a global AI incidents monitor for standardised AI incident reporting

In the long run, AIM will evolve into a tool that pools standardised information about AI incidents and controversies worldwide.

Current work to establish a common framework for incident reporting will become part of AIM to ensure consistency and global interoperability. Developers will be able to link incidents to relevant tools in the Catalogue of Tools & Metrics for Trustworthy AI, helping all actors avoid repeating known incidents across jurisdictions and making AI more trustworthy.