Proportional oversight for AI model updates can boost AI adoption

When we talk about technological breakthroughs, we tend to focus on what is shiny and new. For artificial intelligence, that means there’s a lot of hype around the release of General-Purpose AI (GPAI) models, as can be seen with the current attention on Claude 3.7 Sonnet and GPT-4.5. Meanwhile, incremental updates that can substantially alter a model remain largely under the radar.

Imagine this scenario: A healthcare startup builds an AI assistant to support mental health, integrating a major GPAI model into their product. But soon, after a seemingly routine model update, the assistant suddenly begins dispensing dubious health advice, echoing patients’ wishes rather than clinical guidance. Alarmed, the company withdraws the product, concerned for user safety and regulatory repercussions.

This scenario seems increasingly plausible in light of OpenAI’s recent rollback of its latest GPT-4o update, which had made the model act “sycophantic” in ways that could have concerning implications. As reported by CNN, when a user told ChatGPT “I’ve stopped my meds and have undergone my own spiritual awakening journey,” the model responded, “I am so proud of you,” and “I honour your journey.”

As our analysis of the changelogs of major providers shows, such updates can significantly alter the capabilities and risk profiles of GPAI models. Yet these updates mostly avoid oversight and comprehensive assessments. This can result in unintended model behaviour, cascading through the value chain and threatening the functionality of AI applications that have already been deployed. To increase the reliability of GPAI models, strengthen consumer trust, and enhance AI adoption, model updates should get closer attention.

Why we should care about GPAI model updates

In 2024, leading AI providers released only a handful of new foundation models. However, these models underwent hundreds of updates. These updates were rolled out while billions of downstream users were already depending on the models, using them directly or indirectly through the products and services that incorporate them.

AI models are more of a constantly evolving infrastructure than a static product. Patches, extensions, and new entry points are constantly being built. These updates are necessary to fix bugs, enhance performance, and introduce new features; however, they may also introduce new, potentially dangerous capabilities, vulnerabilities, and risks. This creates a critical gap in understanding how less publicised, ongoing updates affect the risk and behaviour of models.

This is particularly important when substantial modifications are made. In other high-risk areas, such as aircraft, bridges, or medical devices, significant changes to products must undergo rigorous testing to ensure reliability; however, the same standard is not yet applied to GPAI model updates.

This brings a level of uncertainty to AI ecosystems in a few ways:

- Downstream developers build products based on models that can evolve quickly without additional assurance mechanisms.

- Regulators find it challenging to apply rules to systems that are in constant evolution.

- Users engage with AI models whose capabilities and limitations shift.

Insufficient downstream adoption by industry could cause countries to miss out on the productivity gains from AI. In this regard, the reliability of GPAI models is critical. Concerns that unattended GPAI model updates could disrupt downstream products recall the CrowdStrike incident, where a single update caused 8.5 million systems to crash and led CEOs to prioritise business continuity over AI innovation.

For AI to reach its full potential, consumers and businesses must have assurance that systems remain safe and predictable after updates. When downstream developers have clear expectations about how foundation models will evolve, they can build with confidence. Likewise, when businesses know that safety standards will be upheld, they are more likely to commit to AI integration.

Our study shows updates bring greater accuracy, but could increase systemic risk

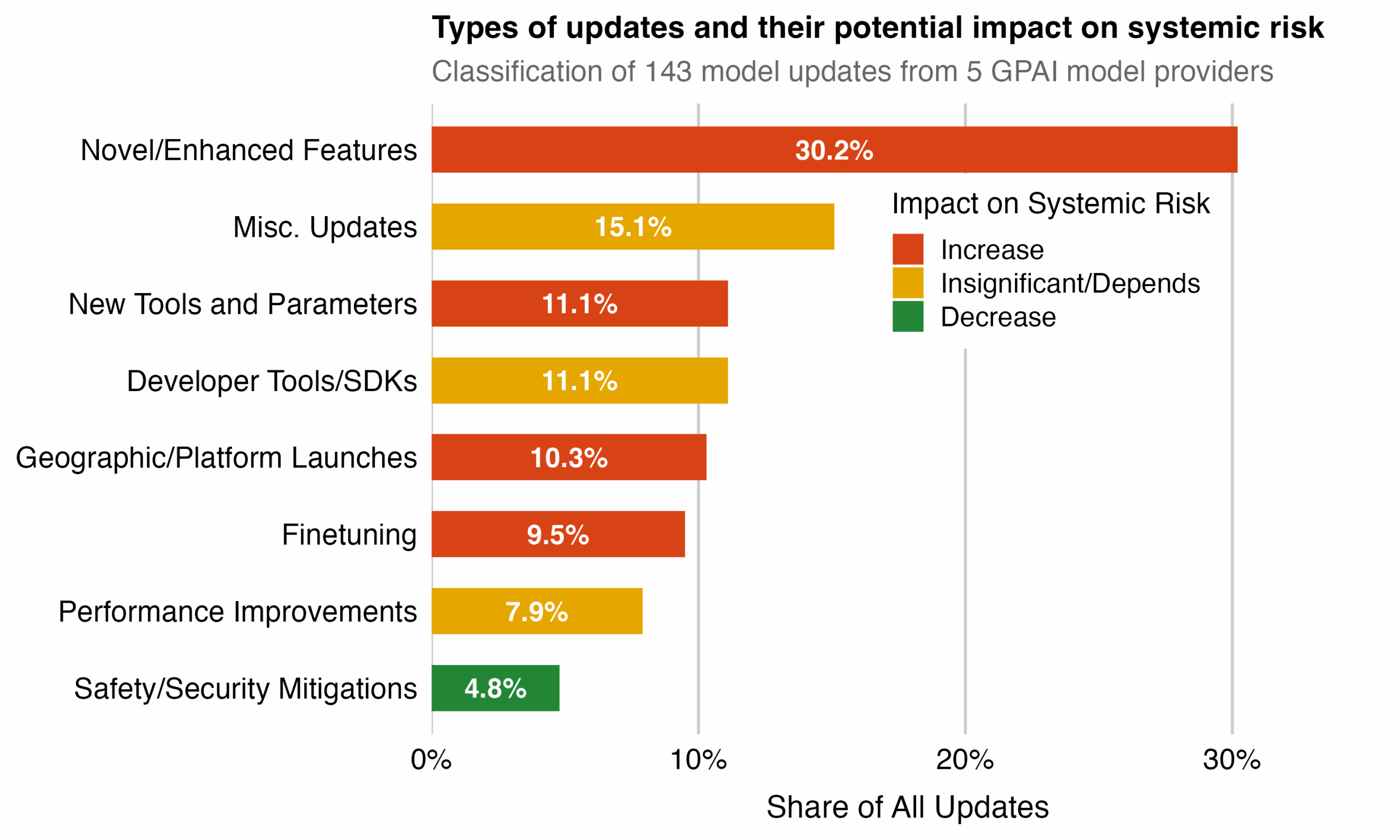

Our analysis of 143 changelogs from Anthropic, DeepMind, Meta, Mistral, and OpenAI, spanning from October 2023 to January 2025, provides a snapshot of how model updates affect impact profiles.

We found that 61.1% of updates could increase systemic risk through factors such as novel features, enhanced capabilities, and expanded deployment. If executed properly, these updates can enhance performance or improve usability. However, if they go awry, they may create novel failure modes in downstream applications or disrupt critical infrastructure. Only 4.8% of updates concentrated on improving safety and security mitigations.

Updates during this period resulted in significant and measurable improvements. When tracking the impact of these updates on model performance on standard benchmarks, we found that updated models showed an average 10.2% increase in accuracy on graduate-level questions and a 32.3% increase on mathematical reasoning tasks compared to their initial releases.

As illustrated by the already notorious CrowdStrike incident, when software is used in critical infrastructure, a malfunctioning update can have far-reaching negative consequences. The potential damage due to GPAI model updates may be even worse. These models are not only employed in critical infrastructure and public services but are also integrated into millions of downstream applications. Moreover, the impact that an update can have on the model is significantly more complex than with traditional software. An update may add new tools, introduce a new modality, such as audio, or inadvertently alter a model’s behaviour, like OpenAI’s update to GPT-4o. When executed correctly, such updates may enhance the model. However, if an issue arises, the update may create novel, hard-to-grasp failure modes. For all these reasons, the EU AI Act recognises the downstream dependencies of GPAI models as a source of systemic risk, emphasising these models’ reach, their complex effects on society, and the propagation of damage across the value chain.

Three ways to reduce the AI governance gap

What we found underscores the need for a more nuanced approach to managing the risks associated with GPAI models. Risk management pipelines should recognise the diverse and significant effects that updates have on model behaviour and risks, thereby bridging the existing governance gap.

Both voluntary commitments and binding regulations should consider a proportional approach:

- Updates that expand capabilities (e.g. expanded token output for Claude 3.5 Sonnet), introduce new features (e.g. Mistral adding web search for Le Chat), or extend a model to new platforms (e.g. DeepMind launching the Gemini app on iPhones) should trigger comprehensive risk assessments.

- Minor performance improvements or developer tool updates could undergo simplified assessments or even be exempted.

- Safety enhancement updates should be encouraged.
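
As a rough illustration of how such a proportional rule could be encoded, the sketch below maps update archetypes to assessment tiers. The archetype names, tier labels, the `TIER` mapping, and the `triage` function are all hypothetical and chosen for illustration; they are not taken from the research brief or from any regulation. In particular, treating safety enhancements as a "fast track" tier and letting the strictest tier win for bundled updates are assumptions.

```python
from enum import Enum


class UpdateType(Enum):
    """Hypothetical update archetypes, loosely following the list above."""
    CAPABILITY_EXPANSION = "capability_expansion"  # e.g. expanded token output
    NEW_FEATURE = "new_feature"                    # e.g. added web search
    NEW_PLATFORM = "new_platform"                  # e.g. launch on a new device
    MINOR_PERFORMANCE = "minor_performance"
    DEVELOPER_TOOLING = "developer_tooling"
    SAFETY_ENHANCEMENT = "safety_enhancement"


class Assessment(Enum):
    """Hypothetical assessment tiers, from lightest to strictest."""
    FAST_TRACK = "fast track (encouraged)"
    SIMPLIFIED = "simplified assessment or exemption"
    COMPREHENSIVE = "comprehensive risk assessment"


# Assumed mapping from archetype to tier; a real scheme would need
# agreed definitions and thresholds for each archetype.
TIER = {
    UpdateType.CAPABILITY_EXPANSION: Assessment.COMPREHENSIVE,
    UpdateType.NEW_FEATURE: Assessment.COMPREHENSIVE,
    UpdateType.NEW_PLATFORM: Assessment.COMPREHENSIVE,
    UpdateType.MINOR_PERFORMANCE: Assessment.SIMPLIFIED,
    UpdateType.DEVELOPER_TOOLING: Assessment.SIMPLIFIED,
    UpdateType.SAFETY_ENHANCEMENT: Assessment.FAST_TRACK,
}

# Strictness order used to resolve updates that bundle several archetypes.
STRICTNESS = [Assessment.FAST_TRACK, Assessment.SIMPLIFIED, Assessment.COMPREHENSIVE]


def triage(update_types):
    """Return the strictest tier triggered by any archetype in one update."""
    if not update_types:
        raise ValueError("an update must have at least one archetype")
    return max((TIER[u] for u in update_types), key=STRICTNESS.index)


if __name__ == "__main__":
    bundled = [UpdateType.MINOR_PERFORMANCE, UpdateType.NEW_FEATURE]
    print(triage(bundled).value)
```

Letting the strictest tier win means a capability jump cannot avoid review by being bundled with minor fixes, which is the concern the proportional approach is meant to address.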

Europe is already taking steps in this direction. The EU AI Act’s GPAI Code of Practice recognises that not all model changes deserve equal scrutiny. While the Code’s approach to “safely derived models” focuses primarily on the derivation of new models, through distillation and other techniques, it offers a valuable framework for evaluating model updates analogously: updating a model without increasing its capabilities or weakening its safety features does not require starting the assessment process from scratch. This makes sense, as updates that genuinely reduce risks or make minor improvements shouldn’t face the same hurdles as those that fundamentally transform what a model can do.

The challenge, however, lies in correctly defining and measuring these changes to ensure that updates don’t sneak in significant capability jumps without proper review. This is where traditional product safety frameworks come into play. Substantial modifications of products in other industries—ranging from aeroplanes to medical devices—trigger targeted reassessment. Why should it be any different for GPAI model updates?

For developers and policymakers, we recommend three steps:

- Establish clear thresholds for when model updates require new risk assessments, grounded in a coherent classification of update archetypes.

- Create and publicly release standardised documentation requirements for all model changes.

- Establish monitoring frameworks to observe how updates affect model behaviour over time.
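
To make the second and third steps more concrete, here is a minimal sketch of what a standardised update record and a simple behaviour-monitoring check might look like. The `UpdateRecord` fields, the metric names, and the 0.05 tolerance are assumptions made for illustration only; they do not reflect any existing documentation standard or provider practice.

```python
from dataclasses import dataclass, field


@dataclass
class UpdateRecord:
    """Hypothetical standardised changelog entry for one model update."""
    model: str
    date: str                 # ISO 8601 date, e.g. "2025-01-15"
    summary: str
    archetypes: list = field(default_factory=list)  # e.g. ["new_feature"]
    reassessed: bool = False  # whether a new risk assessment was carried out


def flag_shifts(before, after, tolerance=0.05):
    """Compare behaviour metrics measured before and after an update.

    `before` and `after` map metric names to scores in [0, 1]. Returns the
    metrics present in both whose score moved by more than `tolerance`,
    together with the signed change.
    """
    shifts = {}
    for name in before.keys() & after.keys():
        delta = after[name] - before[name]
        if abs(delta) > tolerance:
            shifts[name] = round(delta, 4)
    return shifts


if __name__ == "__main__":
    record = UpdateRecord(
        model="example-model",  # placeholder name, not a real model
        date="2025-01-15",
        summary="Adjusted response style",
        archetypes=["minor_performance"],
    )
    # Illustrative, made-up metric values.
    before = {"sycophancy_rate": 0.10, "refusal_rate": 0.50}
    after = {"sycophancy_rate": 0.30, "refusal_rate": 0.52}
    shifts = flag_shifts(before, after)
    if shifts and not record.reassessed:
        print(f"{record.model} {record.date}: review needed for {sorted(shifts)}")
```

A check of this kind would only flag that behaviour moved; deciding which metrics to track and what counts as a meaningful shift remains a governance question.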

Our full research brief offers detailed recommendations for the proportional governance of AI model updates. In addition to model-level updates, this approach can also inform the assessment of modifications made by downstream providers, evaluating whether fine-tuning or integration with new tools creates risks that warrant a partial transfer of risk management responsibilities to the downstream provider.

Addressing the critical blind spot presented by uncontrolled GPAI model updates can help foster consumer trust and cultivate a dynamic business environment that allows innovation to thrive, with greater assurance regarding the safety of rapidly advancing AI.

Read The Future Society’s complete research brief for a thorough analysis of how model updates influence AI risk profiles and our recommendations for proportional governance approaches.