Is AI a wake-up call for financial services in the UK?

AI in finance means rethinking risk management

The growth of artificial intelligence (AI) in financial services accelerated during the pandemic, raising questions about whether the industry’s risk management and governance systems can keep up.

While it is clear that households, firms, and the wider economy could benefit from more tailored financial services, lower costs, and increased competition, the use of AI also presents novel challenges and risks. For example, flawed assessments of consumers' credit risk profiles could lead to people being incorrectly priced out of products or financially excluded. This could also create reputational risk for firms, and poor credit algorithms could even lead to financial losses.

The use of large datasets that are hard to validate at a granular level could also lead to biased and unfair decisions. Given what is at stake, all stakeholders need to be confident that AI in financial services is safe and responsible, and that no particular demographic is excluded from markets or faces unfair prices.

The UK’s AI Public-Private Forum (AIPPF)

Statistical and mathematical modelling have long been intrinsic to the financial industry. Our annuities, our mortgage rates and ‘why I pay more for insurance than you do’ all depend on such models. What has changed is the industry's digitalisation, with huge datasets, more sophisticated algorithms, and vastly increased computing power. Most recently, financial services firms have started using advanced AI techniques for a range of tasks, including anti-money laundering, fraud detection, lending, and insurance underwriting. New opportunities and new sources of data, along with decisions about how algorithms are maintained and trained, bring further complex challenges that must be managed. But how does the complexity of AI modelling, including the associated data challenges, differ from what came before, and what does that mean for firms and regulators?

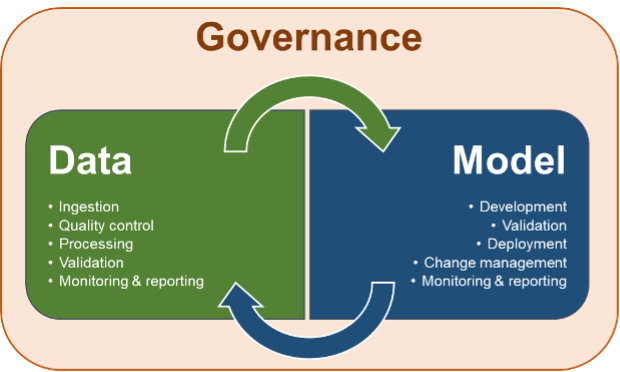

To answer this question and others, the UK’s financial services regulators, the Bank of England (BoE) and Financial Conduct Authority (FCA), set up the AI Public-Private Forum (AIPPF) in late 2020. The objective of this year-long project was to start an open conversation with key stakeholders about the challenges of using AI in financial services and how to address or overcome them. The project brought together a diverse group of AI experts from across financial services, technology companies and academia, along with other UK regulators and government ministries as observers. The AIPPF focused on three key topics: data, models, and governance. These are the three areas of the AI lifecycle where difficulties typically arise (the accompanying graphic shows the interplay between them).

Greater complexity plus more and different data differentiate AI from previous technologies

The AIPPF discussions swiftly revealed how far the combination of large datasets and complex AI systems can detect patterns and make predictions on an entirely new scale. The technology also makes it possible to analyse not just traditional financial data, but also unstructured and alternative data from new sources such as social media sites, satellite images, and shopping patterns. This is possible because AI techniques can transform unstructured data, such as words or images, into structured data that algorithms can analyse. These new and expansive forms of data mark a fundamental change from previous analytical techniques and technologies.
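A minimal sketch of what "turning unstructured data into structured data" can mean for text: a bag-of-words count vectoriser that maps each free-text document to a fixed-length vector of word counts that a model can consume. This is only one simple technique chosen for illustration (real systems typically use richer text representations), and the example sentences and function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def build_vocabulary(documents: list[str]) -> list[str]:
    """Collect every distinct token across the corpus, in sorted order."""
    vocab: set[str] = set()
    for doc in documents:
        vocab.update(tokenize(doc))
    return sorted(vocab)


def vectorize(document: str, vocabulary: list[str]) -> list[int]:
    """Map one document to a fixed-length vector of token counts."""
    counts = Counter(tokenize(document))
    return [counts[word] for word in vocabulary]


# Hypothetical customer messages: free text in, numeric rows out.
docs = [
    "Late payment on card again",
    "Card payment declined, card blocked",
]
vocab = build_vocabulary(docs)
matrix = [vectorize(d, vocab) for d in docs]
print(vocab)
print(matrix)
```

Each column of the resulting matrix corresponds to one vocabulary word, so the messages can now be fed to the same kinds of algorithms used for tabular financial data.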

As the AIPPF progressed, it became clear that the AI models themselves also represent a change. For one, AI systems can increase both the speed and the scale of model-based decisions. While this offers huge potential for efficiency gains, it can also amplify the risks and consequences of those decisions, especially when the systems are used in critical business areas. Because AI systems can now analyse unstructured data, they can also be used for business activities that previously made no use of data analytics or modelling. Just consider the use of chatbots for customer service and marketing.

Dynamic data and AI models

The dynamic nature of some AI systems means they can learn continuously from live data, so both the models and their outputs can change at any moment. This became particularly relevant during the Covid-19 pandemic.

Take the example of anti-money laundering (AML). Many AI models used for AML rely on behavioural data, and their performance dipped when consumer spending habits changed, with almost all payments shifting to “card not present” transactions overnight. The ability to analyse unstructured data and to learn continuously marks a significant change from previous models and brings new challenges for managing the risks associated with AI.
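One common way to detect that the data feeding a model has shifted is the population stability index (PSI), which compares the distribution a model was built on with the distribution it sees now. The sketch below applies it to a hypothetical transaction channel mix; the shares, category names and the 0.1/0.25 bands are illustrative assumptions (the bands are an informal industry rule of thumb, not a regulatory threshold), and PSI is only one of several possible drift measures.

```python
import math


def population_stability_index(expected: dict[str, float],
                               actual: dict[str, float],
                               eps: float = 1e-6) -> float:
    """PSI = sum over categories of (a - e) * ln(a / e).

    `expected` and `actual` map each category to its share of transactions
    (each set of shares should sum to 1). `eps` avoids log(0) when a
    category is empty in one of the two periods.
    """
    psi = 0.0
    for category in expected.keys() | actual.keys():
        e = max(expected.get(category, 0.0), eps)
        a = max(actual.get(category, 0.0), eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_level(psi: float) -> str:
    """Rule-of-thumb bands; the thresholds are a convention, not a standard."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift"
    return "significant shift"


# Hypothetical channel mix before and after a sudden change in spending habits.
baseline = {"card_present": 0.7, "card_not_present": 0.3}
current = {"card_present": 0.2, "card_not_present": 0.8}

psi = population_stability_index(baseline, current)
print(f"PSI = {psi:.3f} -> {drift_level(psi)}")
```

A shift of this size would flag that a behavioural model's inputs no longer resemble the data it was trained on, which is the situation the AML example describes.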

AI’s complexity versus explainability

Whilst this complexity often delivers greater predictive accuracy than more traditional techniques, it also makes it much harder to explain how models arrive at their decisions (the so-called ‘black box problem’). Even for a single AI algorithm, model or system, this complexity can be hard to understand fully.

It gets even harder to understand multiple, interconnected AI systems, where the outputs of one system become the inputs of another. For example, an AI system could analyse multiple text documents (such as board packs) to produce quantitative signals or metrics, which could then feed into an AI trading system that helps determine asset allocation. The risks may be greater still for networks of AI models operating within financial markets, as these could pose risks to financial stability. All of which raises fundamental questions about the transparency of AI systems and the governance around them.

AI’s many governance questions

Governance was a constant theme in all of the AIPPF discussions because it is crucial to addressing many data- and model-related challenges. Even more importantly, AI poses a fundamental challenge to the notion of governance itself, since the technology has the potential for autonomous decision-making. Such a development could have profound implications for accountability and responsibility. AIPPF members stressed, however, that most AI systems currently support human decision-making rather than replace it.

That said, the potential for greater autonomous decision-making raises a whole host of new challenges – from the need to have a clear framework for ensuring accountability and responsibility for AI systems inside firms, to more holistic approaches to data governance, including addressing ethical considerations, and even implications for regulatory requirements, such as the Senior Managers and Certification Regime.

The use of AI also requires different skills, which are scarce both within firms and in the wider economy. The current skills gap touches every level of decision-making, from data scientists to senior managers and board members.

Better governance and international coordination for data and AI systems

Since AI may amplify risks, firms may need to adjust their approaches to risk management and governance where AI is concerned. Regulators can also support the safe adoption of AI in financial services, and AIPPF members suggested that regulators provide guidance on how existing regulations and policies apply to AI.

While many data considerations are not specific to AI, those linked to data quality and a real understanding of data attributes are of paramount importance to AI's safe adoption. Yet some attributes, such as representativeness, are not always included in data quality metrics and may need to be added. To this end, AIPPF members pointed out that firms will need to focus much more on documenting, versioning, and continuously monitoring data. This suggests that firms may need to develop data strategies to ensure the safe adoption of AI.
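As a minimal sketch of how documenting, versioning and a representativeness metric might sit together in one data quality record: everything below is hypothetical, including the field names, the reference population shares, and the choice of total variation distance (half the sum of absolute differences in group shares) as the representativeness measure. Versioning here is simply a SHA-256 hash of the data, so any change produces a new version identifier.

```python
import hashlib
import json
from collections import Counter
from dataclasses import dataclass
from typing import Any

Row = dict[str, Any]


@dataclass(frozen=True)
class DataQualityRecord:
    version: str                   # content hash: any change to the data gives a new version
    rows: int
    completeness: float            # share of rows with no missing (None) fields
    representativeness_gap: float  # 0 = matches the reference population, 1 = no overlap


def dataset_version(rows: list[Row]) -> str:
    """Hash a canonical JSON serialisation of the rows (keys sorted) with SHA-256."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def representativeness_gap(rows: list[Row], field: str,
                           reference_shares: dict[str, float]) -> float:
    """Total variation distance between group shares in the data and a reference population."""
    counts = Counter(row[field] for row in rows if row.get(field) is not None)
    total = sum(counts.values())
    if total == 0:
        return 1.0  # no usable values: treat as maximally unrepresentative
    groups = set(counts) | set(reference_shares)
    return 0.5 * sum(abs(counts[g] / total - reference_shares.get(g, 0.0)) for g in groups)


def assess(rows: list[Row], field: str,
           reference_shares: dict[str, float]) -> DataQualityRecord:
    """Build one documented, versioned quality record for a dataset."""
    complete = sum(all(value is not None for value in row.values()) for row in rows)
    return DataQualityRecord(
        version=dataset_version(rows),
        rows=len(rows),
        completeness=complete / len(rows) if rows else 0.0,
        representativeness_gap=representativeness_gap(rows, field, reference_shares),
    )


# Hypothetical training extract and reference population shares by age band.
training_rows = [
    {"age_band": "18-34", "income": 30000},
    {"age_band": "18-34", "income": 28000},
    {"age_band": "35-64", "income": 45000},
    {"age_band": "35-64", "income": None},
]
reference = {"18-34": 0.3, "35-64": 0.5, "65+": 0.2}

print(assess(training_rows, "age_band", reference))
```

In this invented extract, the 65+ group is missing entirely and younger customers are over-represented, which the gap metric picks up even though a conventional completeness check (75% of rows complete) says nothing about it.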

Similarly, regulators may need to consider how they can support these developments. For example, additional guidance on existing data regulation in the UK and abroad that addresses data quality aspects such as representativeness could be useful. Transnational coordination between regulators would also help to standardise the solutions offered by data suppliers and marketplaces, which could further enable the safe adoption of AI.

Monitor and report on AI model performance for risk management

When it comes to the AI models themselves, AIPPF members underlined how the speed, scale and complexity of AI may require new approaches to risk management and governance. In particular, the complexity of AI models may push firms and regulators to improve the ‘explainability’ of the models, although the appropriate level of explainability could differ depending on the use case and the group of consumers affected.

On top of these challenges, identifying and managing AI model change, as well as monitoring and reporting model performance, are key parts of effective AI risk management and governance, not least to sound the alert when a model needs to be ‘retrained’. As indicated in the OECD’s work on the classification of AI systems, future approaches to managing and regulating the risks of AI models may need to focus on the behaviour and outputs of the models once they are running, rather than only on their static description or on regulating input factors.
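A minimal sketch of monitoring a running model's outputs and sounding the alert for retraining, assuming that true outcomes eventually become available (for example, confirmed fraud cases). The class name, the baseline accuracy, the tolerance and the window size are all hypothetical; a firm would choose its own metric and thresholds per model and use case, and accuracy is used here only because it is the simplest to show.

```python
from collections import deque
from typing import Deque, Optional


class PerformanceMonitor:
    """Track a rolling window of prediction outcomes and flag possible retraining.

    baseline_accuracy: accuracy observed when the model was validated (assumed).
    tolerance: how far rolling accuracy may fall below the baseline before alerting.
    window: number of most recent labelled predictions to keep.
    """

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05,
                 window: int = 500) -> None:
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes: Deque[bool] = deque(maxlen=window)  # True = prediction was correct

    def record(self, predicted: object, actual: object) -> None:
        """Store whether a prediction matched the outcome observed later."""
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        """Alert only once the window is full, so a handful of early cases cannot trigger it."""
        if len(self.outcomes) < (self.outcomes.maxlen or 0):
            return False
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance

    def report(self) -> dict:
        """A simple summary suitable for periodic reporting to model owners."""
        return {
            "rolling_accuracy": self.rolling_accuracy(),
            "observations": len(self.outcomes),
            "retraining_alert": self.needs_retraining(),
        }


# Hypothetical labels; a tiny window keeps the example short.
monitor = PerformanceMonitor(baseline_accuracy=0.90, tolerance=0.05, window=10)
for _ in range(10):
    monitor.record("legitimate", "legitimate")  # model correct
print(monitor.report())
for _ in range(4):
    monitor.record("legitimate", "suspicious")  # model misses suspicious activity
print(monitor.report())
```

The design choice here is to judge the model on its live behaviour rather than on its static specification, in line with the output-focused approach described above; a real deployment would also log each report so that model changes and alerts are documented.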

When it comes to AI governance, existing frameworks and structures provide a good starting point, not least because AI risk management overlaps considerably with existing data, model and operational risk management functions. The Senior Managers and Certification Regime plays a particularly important role in creating strong conditions for good data governance, models and outputs, as well as for the accountability of key decision-makers. Perhaps most importantly, firms and regulators need to ensure that their whole organisation has the right level of understanding and awareness of the relevant benefits and risks of AI. This could include having a common understanding or classification of AI models, algorithms, and systems within the firm.

Consensus and clarity will be key to adopting AI in financial services

Much like the work currently happening at the OECD, the AIPPF demonstrated the importance of open dialogue between the public and private sectors, especially when it comes to technologies like AI. Working together allowed us to explore how to build a broad-based consensus on how best to use, govern and regulate AI in financial services. Transparency is also key, and we encourage other regulators, firms, academics, and members of the public to read the AIPPF’s final report and engage with the wider debate on AI.

Again, regulators have a role to play in supporting the safe adoption of AI in financial services. The AIPPF report reflects the views of the members as individual experts, rather than those of their organisations, and it does not reflect the views of the Bank or the FCA. Therefore, the Bank of England and the FCA will publish a discussion paper on AI later this year to build on the AIPPF’s work and broaden engagement with a wider set of stakeholders. The discussion paper will aim to provide clarity on the current regulatory framework and how it applies to AI, ask how policy can best support further safe AI adoption, and invite stakeholders to share their views. In the meantime, we will continue to work with the UK government and with regulators in the UK and elsewhere to support the safe adoption of AI.

We don’t have all the answers to the challenges that AI modelling, data, and governance present, but we will continue to work on them. The AIPPF’s discussions suggest we are asking some of the right questions, and that is a good start for finding answers. We would encourage everyone to read the AIPPF final report and keep an eye out for the discussion paper later this year.