AI in financial services – opportunities for putting OECD frameworks into action

At Emerj, our research involves dozens of one-to-one interviews with heads of AI at large financial services organizations (from US Bank to HSBC to Citibank), direct interview access to AI fintech startups in the USA and EU, and literally hundreds of disruptive companies in the financial services and banking ecosystems.

As a member of the OECD.AI Network of Experts, I am excited to see more of the OECD’s work make its way into the C-suite, creating a real impact for responsible adoption, and for beneficial innovation.

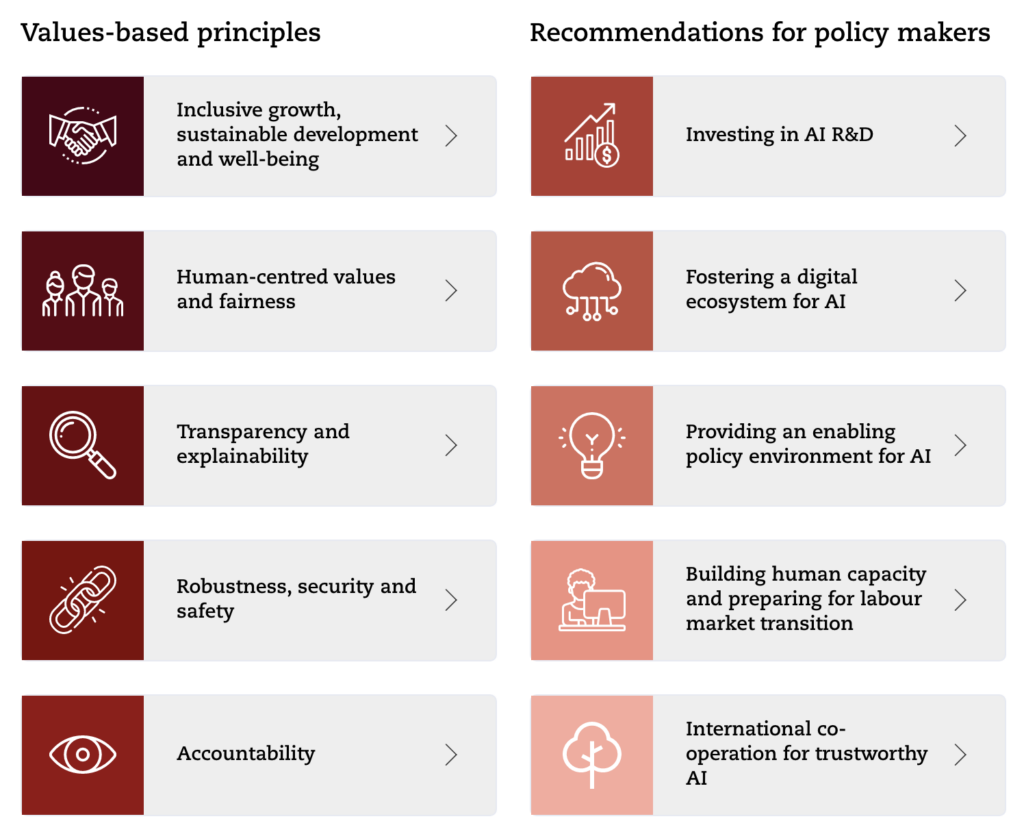

Ideally, the OECD AI Principles and other AI frameworks and models should help OECD nations to both strengthen and preserve their values and privacy, while also bolstering AI innovation and adoption. In this post we’ll cover two of the most important near-term opportunities that we see for the OECD’s AI work to influence the financial services industry.

Risk-related AI spend in FinServ is an opportunity for fairness

The trend I would like to emphasize is that the preponderance of AI investment and real AI traction is in back-end processes. Customer-facing applications such as chatbots, new mobile interfaces and marketing approaches tend to get a lot of buzz and hype, and they make banks look very modern and hip.

The place where the money is actually going is risk-related functions, that is, compliance, cybersecurity and fraud. We see safely over half of the funds raised for AI across the disruptive banking ecosystem go into these areas. This presents two opportunities for the OECD.

The first is a chance to breathe life into the OECD’s principles around fairness and reducing bias. Some of these processes (lending being very high on the list and, to some degree, certain elements of cybersecurity) can be biased in ways that would break the law or violate the values of the banks operating these systems. These are great areas in which to turn the OECD principles into action.

AI ethics makes execs “walk on eggshells” – levels of assessment are needed

Second, we see a huge part of the financial services ecosystem walking on eggshells when it comes to adopting AI. Every project feels like a potential PR gaffe or risk, because it seems that any AI system they invest in will inevitably be wildly biased.

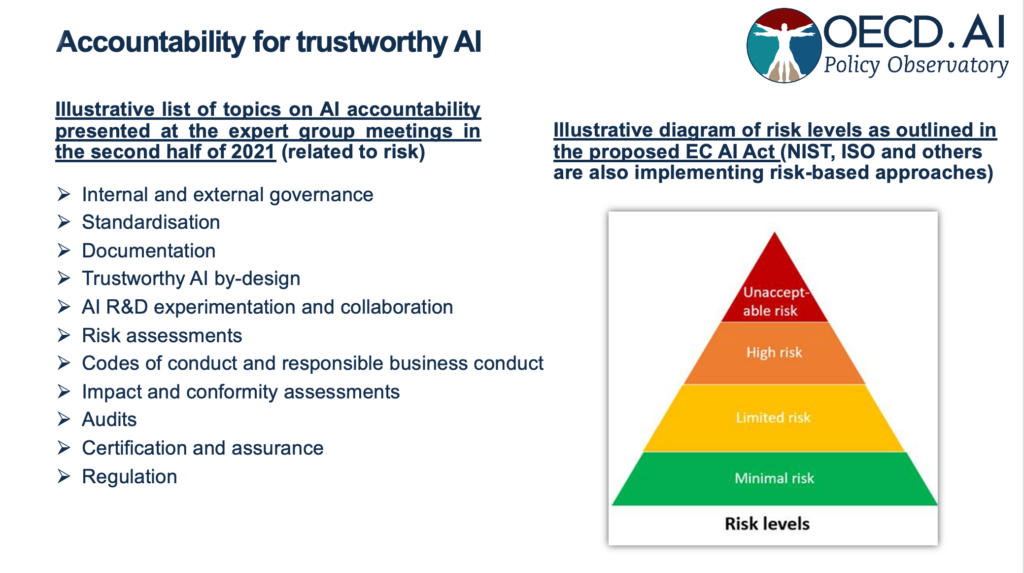

An awareness of real AI bias risks is helpful, but not all AI applications pose serious racial or gender bias risk, and fear won’t solve the problem. The OECD has done some great work on ranking and ordering applications based on how much risk they actually pose.

There may be very innocuous, low-risk applications that can add value for the company, its shareholders and its customers, and that we should feel confident moving forward with. Others do warrant more scrutiny.

Being able to gauge these applications rationally means that executive leadership can put due process in place for genuinely risky AI applications, and can move ahead with confidence (and without expensive and often useless trepidation) when no real threat is posed.

Models of this kind will only become more relevant as AI adoption picks up steam, and we’re excited to see further development in gauging AI risk levels.