AI has made its way to the workplace. So how have laws kept pace?

On the first day of our Ethical Technology Practicum, a graduate course at Duke University that addresses ethical and legal issues concerning artificial intelligence (AI), our professor asked whether we had been exposed to automated hiring practices. Almost all of our classmates said yes. As a general-purpose technology, AI has found a role in the employment context, as it has in so many other domains.

Companies have developed computer vision systems that monitor warehouse and factory floor conditions and identify potential hazards before accidents occur. Employers flooded with resumes have turned to AI systems to streamline hiring. Employers can also use AI to identify staff who may be flight risks and address their concerns before losing valuable team members. Some employers in the gig economy even use AI to manage their entire workforce and set working conditions.

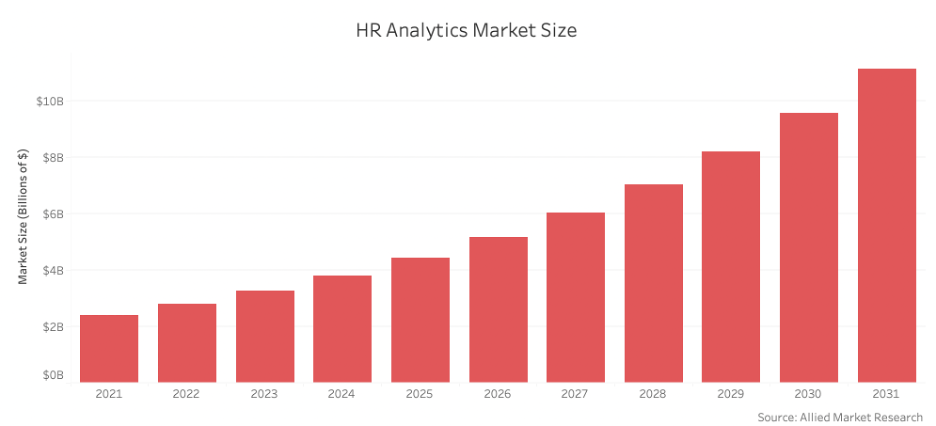

The use of AI in the workplace has proliferated in recent years. In 2017, a global survey found that over 69% of companies with at least 10,000 employees had an HR analytics team that used automated technologies to hire, reward, and monitor employees. Entire companies are now dedicated to providing these services, and projections see the industry growing over the next decade, from an estimated global market size of USD 2.4 billion in 2021 to over USD 11 billion by 2031.
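As a rough back-of-the-envelope check on how steep that projection is, the two endpoint figures imply a compound annual growth rate (CAGR). The sketch below is our own arithmetic, not part of the cited projection; it treats "over USD 11 billion" as exactly 11 billion, so the result is a lower bound, and the function name `implied_cagr` is purely illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate that takes start_value to end_value over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures quoted in the text, in USD billions.
# 11.0 is a lower bound, since the projection says "over USD 11 billion".
rate = implied_cagr(2.4, 11.0, 2031 - 2021)
print(f"Implied CAGR: {rate:.1%}")  # → Implied CAGR: 16.4%
```

In other words, the projection assumes the market grows by roughly 16% or more every year for a decade.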

Risks and regulation of AI in the workplace

While AI can benefit the workplace through safer environments and more efficient HR processes, it also carries risks. Historically discriminatory hiring practices can become reinforced at scale, while increased workplace monitoring can jeopardise individuals’ right to self-determination. Some automated workplace decisions have enormous influence over individual well-being and, under the wrong conditions, can erode human-centred values and fairness.

Unfortunately, laws and regulations have not kept up with the rapid growth of AI in the employment context. In the absence of new regulations, countries rely largely on creatively applying existing laws, such as those governing electronic monitoring and data privacy, to new technologies.

In partnership with OECD.AI, our team identified and documented emerging regulations, case law, and guidance on AI in the employment context across jurisdictions. This post synthesises key trends and themes, primarily in the United States and Europe.

Source: Allied Market Research

Regulators in the United States target data privacy, surveillance, and discrimination

Data privacy and surveillance

Some jurisdictions have imposed notice and consent requirements for using AI in the employment context. In the United States, three states (as well as one Canadian province) have laws requiring employers to notify employees of electronic monitoring, including AI-powered technologies. In New York, Delaware, and Connecticut, employers must provide written notice to all employees whose phone calls, emails, or internet usage will be monitored.

Some states have also imposed notice and consent requirements specifically for AI interview tools. In Maryland, an employer cannot use facial recognition technology during interviews unless the interviewee signs a waiver. In Illinois, employers that use AI to analyse video interviews must inform candidates that AI will be used, explain how it works, and obtain their written informed consent.

The White House Office of Science and Technology Policy issued the Blueprint for an AI Bill of Rights in October 2022. The document is intended to support the development of policies that protect civil rights and democratic values in the design and use of automated systems, and it specifically mentions the need for data privacy in the employment context.

Anti-discrimination

Regulators are targeting both intentional and inadvertent discrimination by automated decision-making. In 2021, the Equal Employment Opportunity Commission (EEOC), the federal agency charged with administering and enforcing laws against workplace discrimination, launched the Artificial Intelligence and Algorithmic Fairness Initiative. The Initiative seeks to ensure that the use of AI and other emerging technologies complies with federal civil rights laws.

So far, the agency has issued technical guidance on how the Americans with Disabilities Act may apply to the use of AI in private-sector employment decisions. It has also sued a tutoring service that programmed its online recruitment software to automatically reject older applicants because of their age.

In 2022, the United States Department of Justice, acting in tandem with the EEOC, issued technical guidance on how certain AI systems used in employment decisions risk violating anti-discrimination laws in the public sector.

The AI Bill of Rights also highlights the risk of discrimination by automated decision tools, specifically citing discrimination in automated resume screening.

In New York City, automated employment decision tools that replace or substantially assist hiring or promotion decisions must undergo annual bias audits. Employers must make the audit results publicly available, notify employees and candidates that these tools will be used, and offer an alternative selection process to those who do not want their applications reviewed by such tools.
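To make the idea of a bias audit more concrete, here is a minimal sketch of one disparity metric such audits commonly report: the impact ratio, meaning each group’s selection rate divided by the selection rate of the most-selected group. This is an illustration under our own assumptions, not the audit methodology the New York City law prescribes. The function `impact_ratios`, the group labels, and the counts are all hypothetical, and the 0.8 threshold is the “four-fifths” rule of thumb from US federal selection guidelines, used here only as an example flag.

```python
from typing import Dict, Tuple


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Return each group's selection rate divided by the highest group's selection rate.

    `outcomes` maps a group label to (number selected, number of applicants).
    """
    rates = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / applicants
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening outcomes: (selected, applicants) per group.
outcomes = {"group_a": (48, 120), "group_b": (30, 100), "group_c": (12, 60)}

FOUR_FIFTHS = 0.8  # illustrative rule-of-thumb threshold, not a legal test
for group, ratio in impact_ratios(outcomes).items():
    flag = "review" if ratio < FOUR_FIFTHS else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({flag})")
```

With these made-up numbers, group_b (0.75) and group_c (0.50) fall below 0.8 and are flagged for review. A low ratio signals a disparity worth investigating; on its own, it does not establish that a tool is unlawful.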

European data protection authorities apply regulations in platform work and teleworking

Europe’s regulatory landscape after the GDPR

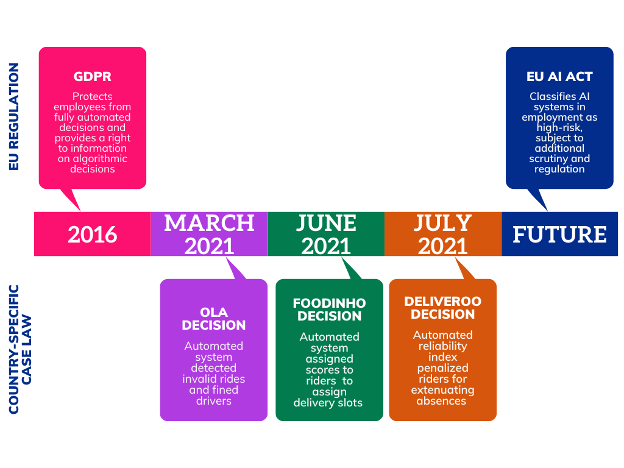

The European Union continues to work on an Artificial Intelligence Act (AI Act) to regulate AI. The initial proposals take a risk-based approach in which high-risk applications are subject to heavy scrutiny and regulation. AI systems used in “employment, management of workers, and access to self-employment” are classified as high risk, given their influence on employment opportunities and livelihoods.

The EU’s General Data Protection Regulation (GDPR) already limits the use of AI in employment. Article 22 of the GDPR gives individuals the right not to be subject to decisions “based solely on automated processing” that produce legal or similarly significant effects on them, which can include decisions that determine employment conditions. Other GDPR provisions also apply to automated employment decisions, such as Article 13, which requires employers to disclose the existence of automated decision-making when collecting personal data.

Platform work ripe for enforcement

Platform workers carry out on-demand tasks for clients through digital platforms such as Uber. Many prominent platform companies use algorithms to determine working conditions. Consequently, much of the enforcement of Article 22 of the GDPR in the employment context has centred on platform work, but the resulting decisions set precedents for all employers.

In a landmark case decided in July 2021, the Court of Bologna, Italy, found that Deliveroo delivery drivers had been discriminated against through an automated reliability index. The index significantly influenced which shifts drivers were offered, but it did not take extenuating circumstances into account when absences lowered drivers’ reliability scores.

In June 2021, Foodinho, another platform delivery firm, was found to have violated several GDPR provisions in its algorithmic management of workers. Foodinho failed to give riders the right to contest, or to request human review of, algorithmic decisions that scored them and affected their employment opportunities. It also violated Article 13 of the GDPR by failing to disclose the logic of, and purpose for, its processing of personal data.

In March 2021, Ola, a rideshare company, was also found to have violated Article 22 of the GDPR by using an automated system to detect invalid rides and fine drivers accordingly. These examples illustrate how European courts and data protection authorities are working to ensure fairness for workers subject to algorithmic decision-making.

In May 2021, the Spanish Government approved a royal decree known as the Rider Law, which requires employers using algorithmic decision-making to disclose key attributes of their algorithms to workers’ representatives. These attributes include the parameters and general logic the algorithm uses to make decisions, a significant step beyond the GDPR.

Telework monitoring gains prominence

In response to the Covid-19 pandemic, millions of people worldwide shifted to teleworking in some capacity. To track productivity, companies sharply increased their demand for monitoring software, such as tools for desktop monitoring and keystroke tracking. The influx of these AI-powered surveillance tools raises legal and ethical questions about employee privacy and fairness, prompting several European countries to prioritise publishing guidance and strengthening regulation.

For instance, the Norwegian Data Protection Authority published best practices for employers considering digital monitoring tools. Portugal’s Data Protection Authority (CNPD) also published explicit guidelines on the limits of employee monitoring. The guidelines prohibit remote surveillance except in special circumstances and specifically identify the monitoring of keystrokes, web history, and physical location as unlawful.

Australia publishes guidance and enforces existing privacy laws

Australia’s AI Ethics Principles are a voluntary, soft-law framework for designing, developing, deploying, and operating AI systems. Among other things, they advise companies to hire people from diverse backgrounds, cultures, and disciplines to ensure a wide range of perspectives and minimise the risk of overlooking important considerations. The principles do not address the use of AI in the workplace, but some Australian authorities have begun to address the issue.

The Office of the Australian Information Commissioner (OAIC) opened an investigation into the personal information handling practices of Bunnings Group Limited and Kmart Australia Limited, focusing on the companies’ use of facial recognition technology. In 2019, Australia’s Fair Work Commission upheld an appeal by a sawmill employee, concluding that he had been unfairly dismissed for refusing to use fingerprint scanners to sign in and out of work.

Many countries still do not have regulations on the use of AI in the workplace

Our research extended beyond the countries discussed above, and we found that many countries have yet to regulate the use of AI in the workplace.

In Latin America, Chile, Colombia, Brazil, and Mexico have developed some soft-law guidance on AI, but none of it directly addresses AI in the workplace. Likewise, Turkey, South Korea, and Japan have issued guidance on AI, but none of it mentions the use of AI in the workplace.

New Zealand and Israel are still developing initial guidance on the use of AI and have yet to address whether their guidance will cover AI in the workplace.

The OECD AI Principles can guide future regulation

As the development and use of AI continue to grow, countries must keep track of policy developments in this field. Existing policies are a starting point, but regulators should evaluate their effectiveness over the long term. This post offers an assessment of selected regulations in the employment context, not a comprehensive review of the laws of all 38 OECD member states.

OECD member states have committed to ensuring that the use of AI adheres to the OECD AI Principles, which promote AI that is innovative and trustworthy and that respects human rights. These principles are critical in the employment context, where they can guide regulators as they address the risks of discrimination, opacity, and weak data security. Future regulations will determine whether companies can benefit from emerging technologies while honouring employees’ rights. Will policymakers get it right, or will they continue to chase technological advancements with the law of yesteryear?

This blog post comes from a partnership between the Duke University Initiative for Science & Society and OECD.AI as part of the Ethical Technology Practicum.

The Ethical Technology Practicum is a course designed and taught by Professor Lee Tiedrich at Duke University through the Duke Science and Society Initiative. Students in the Law and Public Policy Programs collaborated with OECD.AI to research the use of AI in the workplace. This blog post and student work are provided for informational purposes only and do not constitute legal or other professional advice.

This work also informs the German-supported OECD programme on AI in Work, Innovation, Productivity and Skills, which aims to help ensure that AI enters the world of work in effective, beneficial and human-centric ways that are acceptable to the population at large.
