How countries are implementing the OECD Principles for Trustworthy AI

Since signing on to the OECD AI Principles in 2019, countries have been using them as guidance to craft policies to tackle AI risks and capitalise on opportunities. The Principles are a global reference and the first intergovernmental standard for trustworthy AI, reflecting the fundamental challenges and opportunities for economies and societies.

The OECD report The state of implementation of the OECD AI Principles four years on takes stock of national initiatives to implement the OECD AI Principles worldwide. It provides an overview of national AI strategies, AI governance and coordination bodies, expert advisory groups, and monitoring and evaluation frameworks. It also reviews regulatory approaches to date and gives examples of initiatives related to each AI principle so that policymakers can learn from one another.

Here is a quick look at the report’s most important findings.

Countries worldwide are adopting national AI strategies

In 2017, only a few countries had national AI strategies. Today, the OECD.AI Policy Observatory contains over 50 national strategic and government-wide initiatives for trustworthy AI (Figure 1). All OECD members, as well as partner countries in the Arab region, Africa and South America, are promoting the OECD AI Principles. By May 2023, governments had reported more than 930 policy initiatives across 71 jurisdictions to the OECD.AI national policy database.

Governments are using different governance models to manage national AI policies. Some countries have created governmental coordination bodies for AI. The United Kingdom founded the Government Office for AI, a unit within the Department for Science, Innovation and Technology, and the United States established the National Artificial Intelligence Initiative Office (NAIIO) within the White House Office of Science and Technology Policy.

Other countries have assigned AI policy to existing ministries or established inter-ministerial and multi-stakeholder AI committees to oversee the development and implementation of their AI strategies. Examples of this approach include the Governance Committee of the Brazilian AI Strategy and Egypt’s National Council for AI (NCAI).

Outside of government, countries are establishing multi-stakeholder groups of AI experts to advise and report on the current and future opportunities, risks and challenges for the public use of AI. France’s National Consultative Committee on Digital Ethics and AI (FNCDE) and Canada’s Advisory Council on AI are pertinent examples.

Countries such as Germany and Canada have established AI observatories to monitor and evaluate the rollout of their AI strategies. Such monitoring and evaluation mechanisms are still scarce, but they are expected to spread to other countries as national AI strategies move into later stages of implementation.

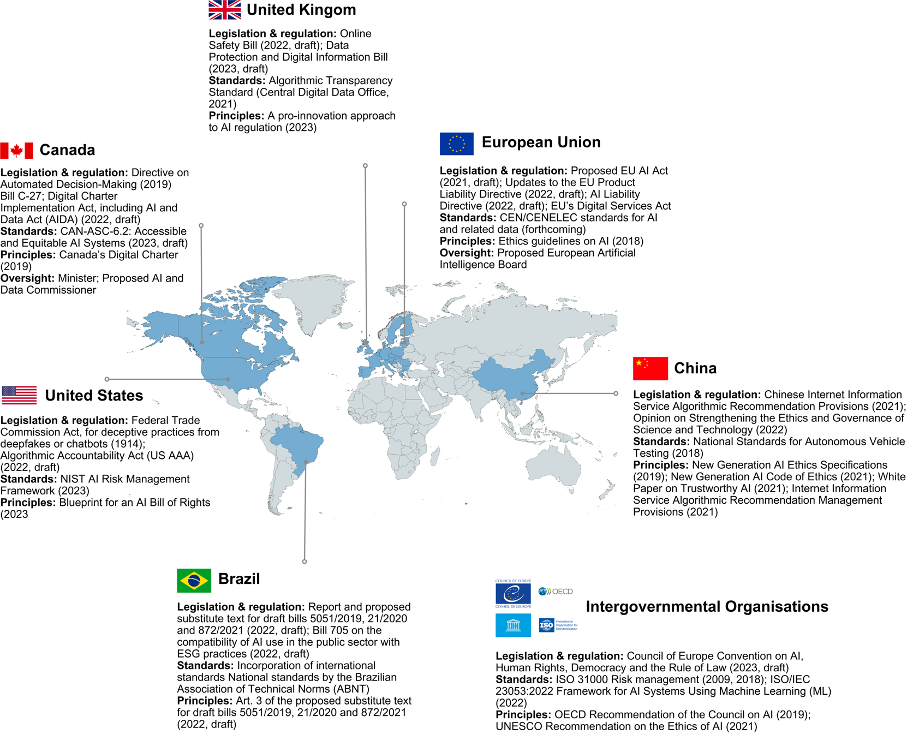

Ethical frameworks, hard and soft laws are among the regulatory frameworks countries use to ensure trustworthy AI systems

Countries are exploring different regulatory approaches to encourage trustworthy AI and mitigate the risks of AI systems. Legislation in other areas, such as data protection, already applies to AI systems, and countries have started codifying the OECD AI Principles and their national AI strategies into AI-specific regulatory frameworks.

First, several countries have national ethical frameworks and AI principles that align with the OECD AI Principles. As of May 2023, the OECD database included 17 such frameworks. Japan, Korea and India provide guidance for developers and operators on how to implement the principles, and Colombia has set up an online platform to monitor the implementation of its framework.

Second, many countries are considering AI-specific regulatory approaches (Figure 2). Some, such as Canada and the European Union, have proposed comprehensive frameworks that would regulate AI systems across domains and applications in all sectors. The Council of Europe’s Committee on Artificial Intelligence is drafting a horizontal international legal instrument on AI.

The United States, the United Kingdom, Israel and China have opted for a sectoral approach: they establish cross-sectoral, non-binding principles and leave it to regulators to implement and enforce rules in their respective sectors.

More broadly, countries are supporting international standardisation efforts. These include ISO/IEC 23053:2022, which establishes a framework for AI systems using machine learning, and ISO 31000 on risk management.

Some countries promote controlled environments for experimentation through regulatory sandboxes, i.e. spaces where authorities work with firms to test innovative products and services that challenge existing legal frameworks. For example, in 2022 Spain created an AI regulatory sandbox as the first pilot programme to test the requirements of the proposed EU AI Act. The United Kingdom and Norway have also launched regulatory sandbox initiatives that include AI.

Implementing values-based OECD AI Principles

The most significant development of the last two years has been policy action that turns the OECD’s values-based AI principles into concrete, operational initiatives. The OECD AI Principles identify five complementary values-based principles for the responsible stewardship of trustworthy AI: (1.1) inclusive growth, sustainable development and well-being, (1.2) human-centred values and fairness, (1.3) transparency and explainability, (1.4) robustness, security and safety, and (1.5) accountability.

These core values guide national and international principles and policies, including emerging AI-specific legislation.

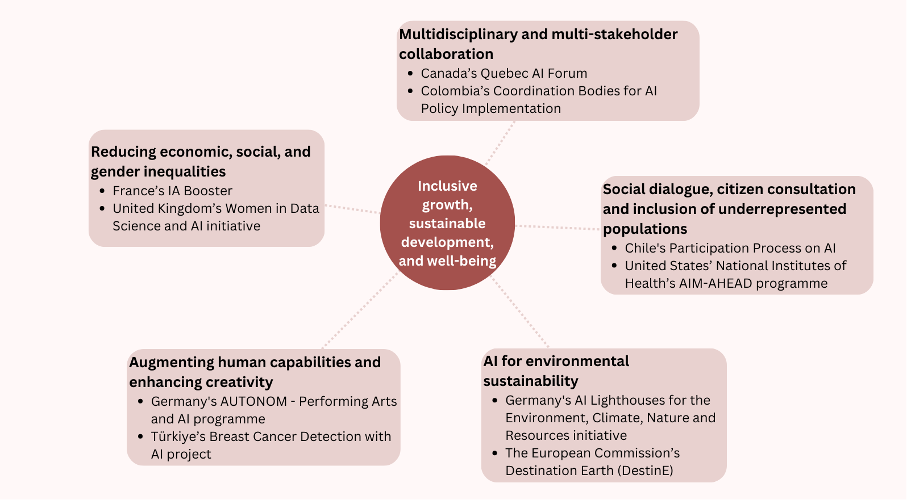

Inclusive growth, sustainable development, and well-being (Principle 1.1)

Governments are pursuing different approaches to achieve inclusive growth, sustainable development, and well-being. Most national AI strategies and AI ethics frameworks or guidelines for implementing AI refer to this principle.

At the policy level, countries have launched initiatives to ensure that stakeholders, including vulnerable groups, are involved in policy design and benefit from AI systems, including through initiatives to augment human capabilities and enhance creativity. Governments are also funding projects that use AI to address environmental challenges.

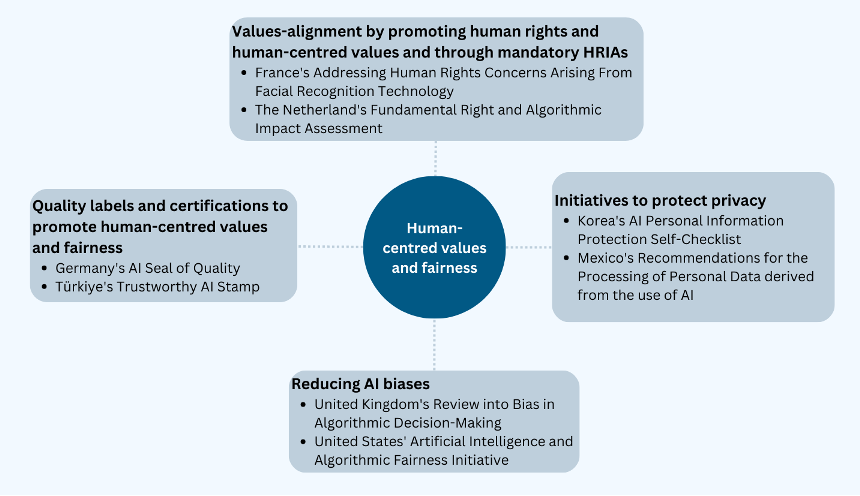

Human-centred values and fairness (Principle 1.2)

To implement Principle 1.2, many governments have issued guidelines and initiatives, mostly non-binding, to reduce AI bias and promote human rights and human-centred values. Fewer have developed human rights impact assessments (HRIAs) or quality seals.

Transparency and explainability (Principle 1.3)

Governments are taking various approaches to ensure AI transparency, ranging from guidelines for implementing AI to oversight bodies. Regulators have recognised the importance of AI transparency and explainability. Transparency provisions already exist in legislation on data protection, privacy and consumer protection, and they also feature in proposed AI-specific regulations, including provisions specific to the workplace. In the public sector, governments are making the use of AI in public services more transparent through initiatives such as AI registers.

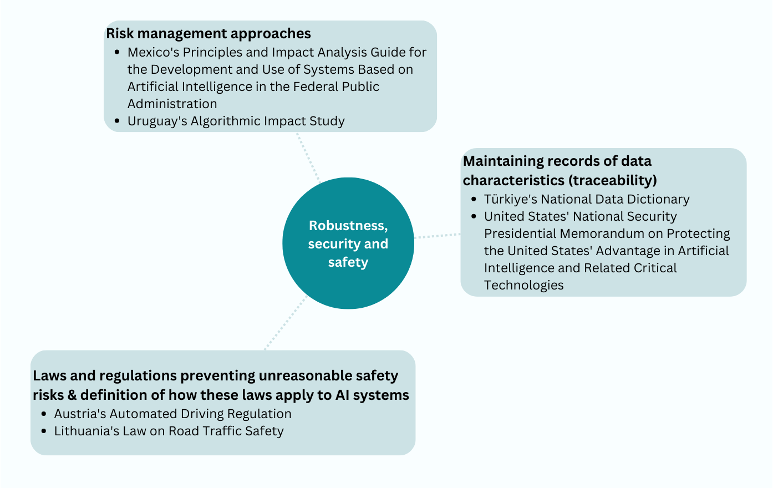

Robustness, security, and safety (Principle 1.4)

Countries are drawing on guidelines, ethics frameworks, impact assessments, new legislation, amendments to existing legislation and other instruments to implement Principle 1.4. Much of the focus is on how traffic laws and other regulations intended to prevent unreasonable safety risks apply to AI systems. In addition, countries are developing risk management approaches and record-keeping mechanisms that document data characteristics.

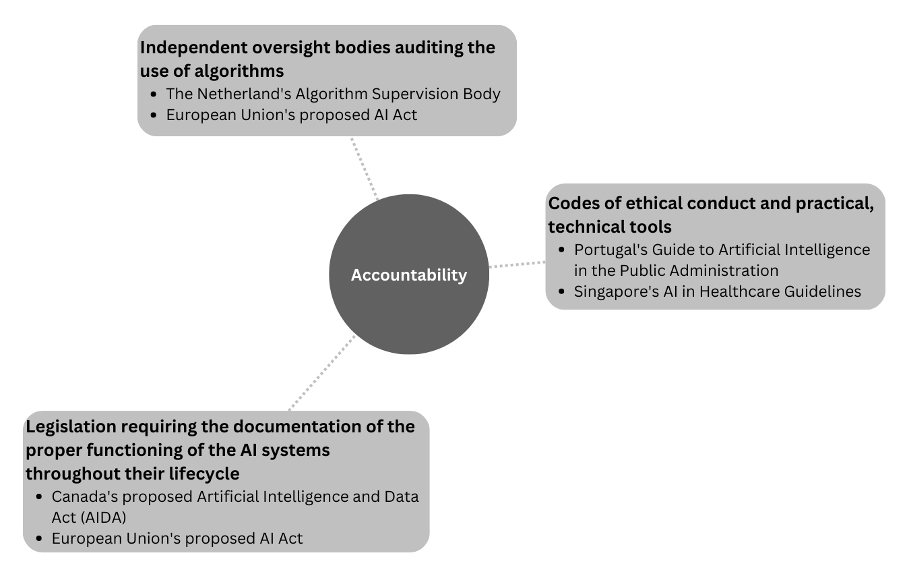

Accountability (Principle 1.5)

Countries have developed codes of ethical conduct for using or implementing AI in several sectors, such as public administration, health care and autonomous driving. Proposed AI-specific regulation would also require documentation explaining how an AI system functions properly throughout its lifecycle. Finally, some countries have established independent oversight bodies to audit the use of algorithms.

| Country or organisation | Example initiative |
|---|---|
| Canada | Proposed Artificial Intelligence and Data Act (AIDA) |
| European Union | Proposed AI Act |
| Portugal | Guide to Artificial Intelligence in the Public Administration |
| Singapore | AI in Healthcare Guidelines |
| The Netherlands | Algorithm Supervision Body |

Implementing the five recommendations to governments

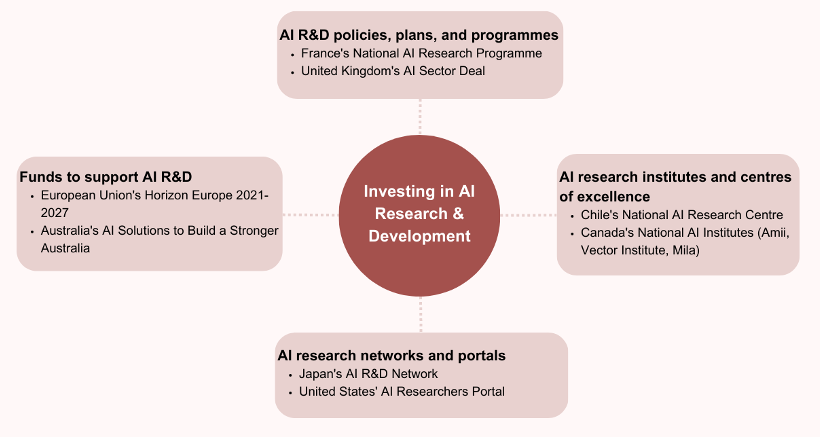

Investing in AI Research & Development (Principle 2.1)

Many countries recognise the importance of policies to support AI R&D and have put forward initiatives to ramp up these efforts. Most national AI strategies focus on AI R&D as one of the key areas for action. Countries have dedicated AI R&D funding and support it through different instruments. Main trends include launching AI R&D-focused policies, plans, and programmes, supporting the creation of national AI research institutes and centres, and consolidating AI research networks and collaborative platforms.

| Country or organisation | Example initiative |
|---|---|
| Australia | AI Solutions to Build a Stronger Australia |
| Canada | National AI Institutes (Amii, Vector Institute, Mila) |
| Chile | National AI Research Centre |
| European Union | Horizon Europe 2021-2027 |
| France | National AI Research Programme |
| Japan | AI R&D Network |
| United Kingdom | AI Sector Deal |
| United States | AI Researchers Portal |

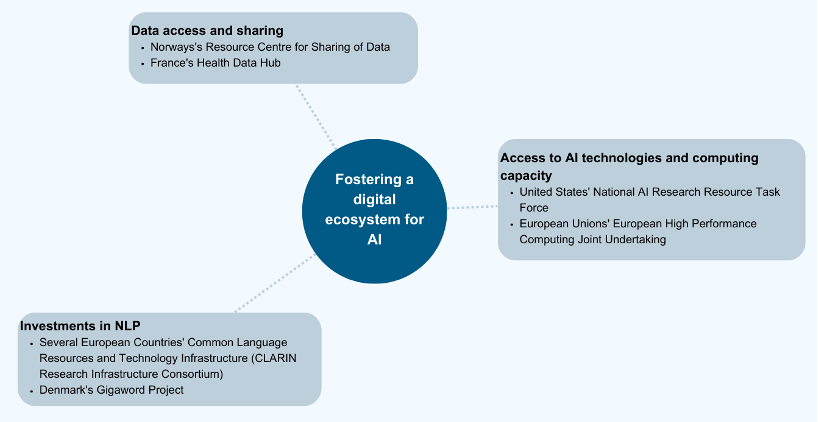

Fostering a digital ecosystem for AI (Principle 2.2)

Embracing AI-enabled transformation depends on the availability of data, infrastructure and software to train and use AI models at scale. Countries therefore need sufficient AI compute capacity to meet their needs and capture AI’s full economic potential. Fostering a digital ecosystem for AI is a crucial component of countries’ efforts to advance AI adoption. These efforts include linking AI policies to data access and sharing policies, increasing computing capacity, broadening access to infrastructure, and investing in natural language processing (NLP) technologies.

| Country or organisation | Example initiative |
|---|---|
| Denmark | Gigaword Project |
| European Commission | European High Performance Computing Joint Undertaking |
| France | Health Data Hub |
| Norway | Resource Centre for Sharing of Data |
| Several European countries | Common Language Resources and Technology Infrastructure (CLARIN Research Infrastructure Consortium) |
| United States | National AI Research Resource Task Force |

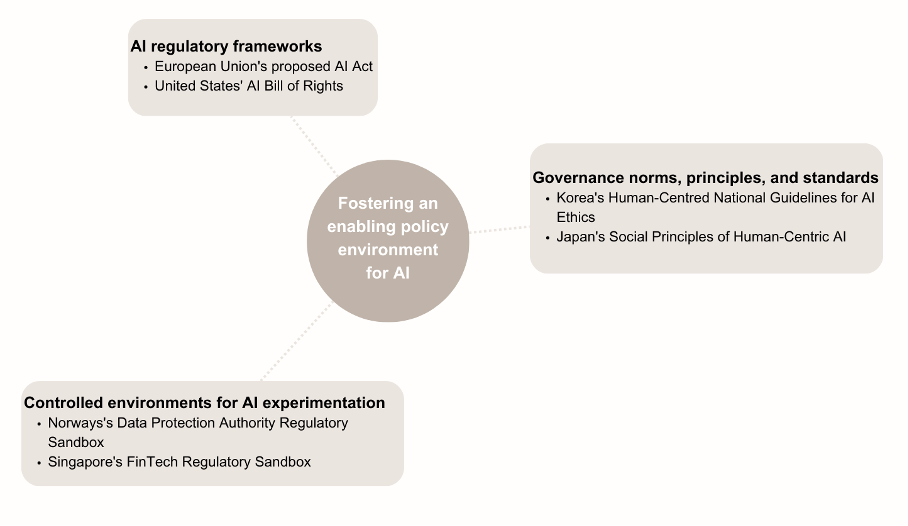

Fostering an enabling policy environment for AI (Principle 2.3)

Countries encourage trustworthy AI use and innovation by reviewing and adapting existing policies and legislation and by adopting AI-specific regulatory frameworks. They also support an agile transition from R&D to commercialisation or deployment of AI by providing controlled environments for experimenting with and testing AI systems.

| Country or organisation | Example initiative |
|---|---|
| European Union | Proposed AI Act |
| Japan | Social Principles of Human-Centric AI |
| Korea | Human-Centred National Guidelines for AI Ethics |
| Norway | Data Protection Authority Regulatory Sandbox |
| Singapore | FinTech Regulatory Sandbox |
| United States | Blueprint for an AI Bill of Rights |

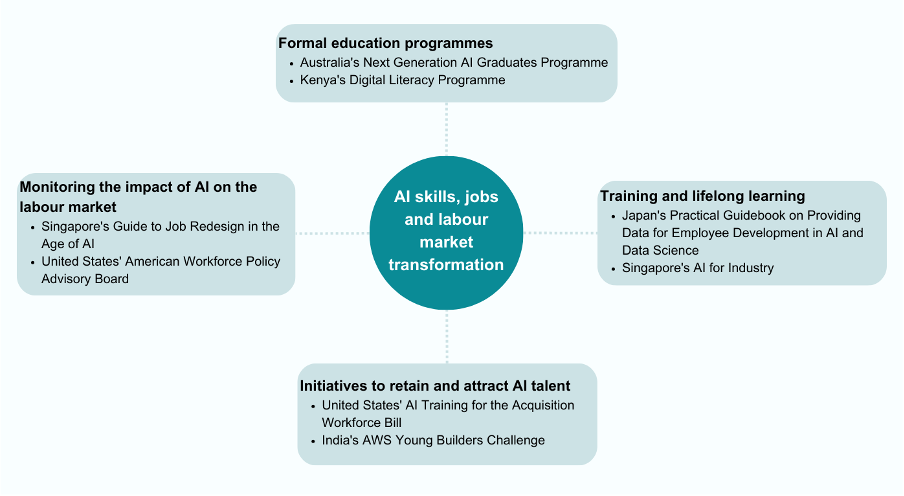

AI skills, jobs and labour market transformation (Principle 2.4)

Governments have put initiatives in place to manage the labour market’s transition and to provide the workforce with the skills needed for AI through formal education programmes, vocational training and lifelong learning. They have also launched initiatives to attract and retain AI talent and to monitor AI’s impact on the labour market.

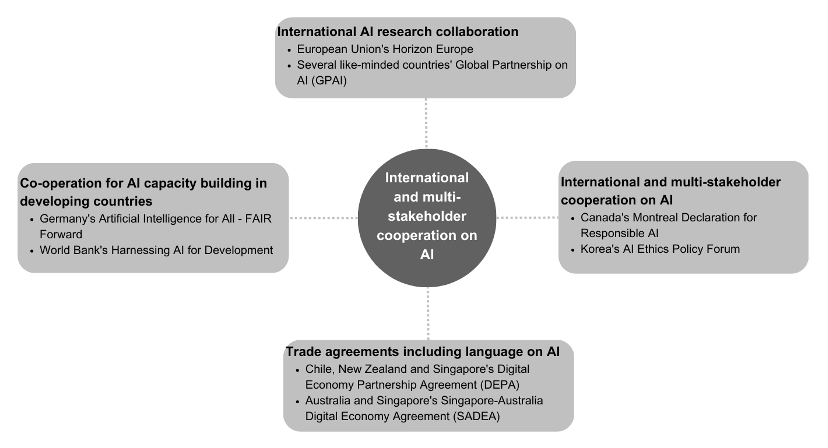

International and multi-stakeholder cooperation on AI (Principle 2.5)

Countries are engaging in international cooperation to promote the beneficial use of AI and address its challenges. This is happening through several types of initiatives, including: i) international research collaborations on AI, ii) trade agreements that include language on AI, and iii) cooperation on AI capacity building in developing countries.

| Country or organisation | Example initiative |
|---|---|
| Australia and Singapore | Singapore-Australia Digital Economy Agreement (SADEA) |
| Canada | Montreal Declaration for Responsible AI |
| Chile, New Zealand and Singapore | Digital Economy Partnership Agreement (DEPA) |
| European Union | Horizon Europe |
| Germany | Artificial Intelligence for All – FAIR Forward |
| Korea | AI Ethics Policy Forum |
| Several like-minded countries | Global Partnership on AI (GPAI) |
| World Bank | Harnessing AI for Development |

At the G7 Summit in Hiroshima in May 2023, leaders tasked their digital and tech ministers with establishing the “Hiroshima AI Process”, through which G7 members would discuss generative AI in an inclusive manner, in cooperation with the OECD and GPAI, by the end of 2023 (Ministry of Foreign Affairs of Japan, 2023). On 7 September 2023, G7 Digital and Tech Ministers issued a statement (MIC, 2023) endorsing: (1) an OECD report (OECD, 2023) summarising a stocktaking of the priority risks, challenges and opportunities of generative AI, based on the priorities highlighted in the G7 Leaders’ Statement; (2) work towards international guiding principles applicable to all AI actors; (3) the development of a code of conduct for organisations developing advanced AI systems, to be presented to the G7 Leaders; and (4) project-based cooperation in support of the development of responsible AI tools and best practices.